Quantum Mechanics: The Death of Local Realism

From Pantheism to Monotheism

Charlie had covered a lot of ground by this point. He’d started in the age of mythology, at the dawn of civilization, looking at the cultural and socio-political forces that underpinned and supported the local mythology and priesthood classes of the ancient civilizations, and some of the broader themes that crossed different cultures around creation mythology, particularly in the Mediterranean region which drove Western civilization development. There was most certainly a lot more rich academic and historical (and psychological) material to cover when looking at the mythology of the ancients but Charlie thought he had at least covered a portion of it and hit the major themes – at least showed how this cultural and mythological melting pot led to the predominance of the Abrahamic religions in the West, and the proliferation of Buddhism, Hinduism and Taoism in the East.

As civilizations and empires began to emerge in the West, there was a need, a vacuum if you will, for a theological or even religious binding force to keep these vast empires together. You saw it initially with the pantheon of Egyptian/Greek/Roman gods that dominated the Mediterranean in the first millennium BCE, gods who were synthesized and brought together as the civilizations from which they originated were brought together and coalesced through trade and warfare. Also in the first millennium BCE we encountered the first vast empires, first the Persian then the Greek, both of which not only facilitated trade throughout the region but also drove cultural assimilation as well.

In no small measure as a reaction to what were considered dated or ignorant belief systems, belief systems that merely reinforced the ruling class and were not designed to provide real insight and liberation for the individual, the philosophical systems of the Greeks emerged. They reflected a deep-seated dissatisfaction with the religious and mythological systems of the time, and even with the political systems that were very much integrated with these religious structures, to the detriment of society at large from the philosophers’ perspective. The life and times of Socrates probably best characterize the forces at work during this period, from which emerged the likes of Plato and Aristotle, who guided the development of the Western mind for the next 2500 years, give or take a century.

Jesus’s life in many respects runs parallel to that of Socrates, manifesting and reacting to the same set of forces that Socrates reacted to, except slightly further to the East and within the context of Roman (Jewish) rule rather than Greek rule, but still reflecting the same rebellion against the forces that supported power and authority. Much of Jesus’s message was lost however. It survives down to us through translation and interpretation that undoubtedly dilute his true teaching, so that only the core remains. The works of Plato and Aristotle do survive down to us though, so we can analyze and digest their complete metaphysical systems that touch on all aspects of thought and intellectual development; the scope of Aristotle’s epistêmai.

In the Common Era (CE), the year of the Lord so to speak (AD), monotheism takes root in the West, coalescing and providing the driving force for the Roman Empire and then the Byzantine Empire that followed it, and then providing the basis of the Islamic Conquests and their subsequent Empire, the Muslims attesting to the same Abrahamic traditions and roots as the Christians and the Jews (of which Jesus was of course one, a fact Christians sometimes forget). Monotheism undoubtedly did borrow and integrate from the philosophical traditions that preceded it, mainly to justify and solidify its theological foundations for the intellectually minded. But with the advent of the authority of the Church, which “interpreted” the Christian tradition for the good of the masses, you find a trend of suppression of rational or logical thinking that was in any way inconsistent with the Bible, the Word of God, or that in any way challenged the power of the Church. In many respects, with the rise in power and authority of the Church we see an abandonment of the powers of the mind, the intellect, which were held so dear by Plato and Aristotle. Reason was abandoned for faith as it were, blind faith in God. The Dark Ages came and went.

The Scientific Revolution

Then another revolution takes place, one that unfolds in Western Europe over centuries and covers first the Renaissance, then the Scientific Revolution and the Age of Enlightenment, where printing and publishing start to make many ancient texts and their interpretations and commentary available to a broader public, outside of the monasteries. This intellectual groundswell provided the spark that ended up burning down the blind faith in the Bible, and in the Church that held its literal interpretation so dear. Educational systems akin to colleges, along with a core curriculum of sorts (scholasticism), start to crop up in Western Europe in the Renaissance and Age of Enlightenment, providing more and more learned men with access to many of the classic texts and rational frameworks. Ideas and thoughts that expanded upon mankind’s notion of reason and its limits, and its relationship to theology and society, begin to be exchanged via letters and published works in a way that was not possible before. This era of intellectual growth culminates in the destruction of the geocentric model of the universe, dealing a crucial blow to the foundations of all of the Abrahamic religions and laying the foundation for the predominance of science (natural philosophy) and reason that marked the centuries that followed and underpins Western civilization to this day.

Then came Copernicus, Kepler, Galileo and Newton, with many great thinkers in between of course, alongside the philosophical and metaphysical advancements from the likes of Descartes and Kant among others, establishing empiricism, deduction and the scientific method as the guiding principles on which knowledge and reality must be based, and providing the philosophical basis for the political revolutions of the 17th and 18th centuries in England, America and France.

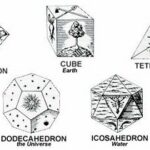

The geometry and astronomy of the Greeks as it turned out, Euclid and Ptolemy in particular, provided the mathematical framework within which the advancements of the Scientific Revolution were made. Ptolemy’s geocentric model was upended no doubt, but his was the model that was refuted in the new system put forth by Copernicus some 15 centuries later, it was the reference point. And Euclid’s geometry was superseded, expanded really, by Descartes’s model, i.e. the Cartesian coordinate system, which provided the basis for analytic geometry and calculus, the mathematical foundations of modern physics that are still with us today.

The twentieth century saw even more rapid developments in science, and in physics in particular, with the extension of Newtonian physics by Einstein’s Theory of Relativity in the early 20th century, followed closely by the advancement of Quantum Theory, which provides the theoretical foundation for the digital world we live in today[1].

But the Scientific Revolution of the 17th, 18th and 19th centuries did not correspond to the complete abandonment of the notion of an anthropomorphic God. The advancements of this period of Western history provided more of an extension of monotheism, a more broad theoretical and metaphysical framework within which the God was to be viewed, rendering the holy texts not obsolete per se but rendering them more to the realm of allegory and mythology, and most certainly challenging the literal interpretations of the Bible and Qur’an that had prevailed for centuries.

The twentieth century was different though. Although you see some scattered references to God (Einstein’s famous quotation “God does not play dice” for example), the split between religion and science is cemented in the twentieth century. The analytic papers and studies that are done, primarily by physicists and scientists, although in some cases have a metaphysical bent or at least some form of metaphysical interpretation (i.e. what do the theories imply about the underlying reality which they intend to explain), leave the notion of God out altogether, a marked contrast to the philosophers and scientists of the Scientific Revolution some century or two prior within which the notion of God, as perceived by the Church, continued to play a central role if only in terms of the underlying faith of the authors.

The shift in the twentieth century however, which can really only be described as radical even though its implications are only inferred and rarely spoken of directly, is the change of faith from an underlying anthropomorphic entity/deity that represents the guiding force of the universe and mankind in particular, to a faith in the idea that the laws of the universe can be discovered, i.e. that they exist eternally, and that these laws themselves are paramount relative to religion or theology which does not rest on an empirical foundation. Some Enlightenment philosophers of course would take issue with this claim, but twentieth century science was about what could be proven experimentally in the physical world, not about what could be the result of reason or logical constructs.

This faith, this transformation of faith from religion toward science as it were, is implicit in all the scientific developments of the twentieth century, particularly in the physics community, where it is fair to say that any statement or position on the role of God in science was taken to reflect ignorance, ignorance of the underlying framework of laws that clearly governed the behavior of “things”, things which were real and which could be described in terms of qualities such as mass, energy, momentum, velocity, trajectory, etc. These constructs were much more sound and real than the fluff of the philosophers and metaphysicians, where mind and reason, and in fact perception, were on par with the physical world to at least some extent. Or were they?

In this century of great scientific advancement, advancement which fundamentally transforms the world within which we live, facilitating the development of nuclear energy, atomic bombs, and digital computer technology to name but a few of what can only be described as revolutionary advancements of the twentieth century, and in turn in many respects drives tremendous economic progress and prosperity throughout the modern industrialized world post World War II, it is science driven at its core by advanced mathematics, which emerges as the underlying truth within which the universe and reality is to be perceived. Mathematical theories and their associated formulas that predicted the datum and behavior of not only the objective reality of the forces that prevailed on our planet, but also explained and predicted the behavior of grand cosmological forces; laws which describe the creation and motion of the universe and galaxies, the motion of the planets and the stars, laws that describe the inner workings of planetary and galaxy formation, stars and black holes.

And then to top things off, in the very same century we find that in the subatomic realm the world is governed by a seemingly very different set of laws, laws which appear fundamentally incompatible with the laws that govern the “classical world”. With the discovery of quantum theory and the ability to experimentally verify its predictions, we begin to understand the behavior of the subatomic realm, a fantastic, mysterious and extraordinary (and seemingly random) world which truly defies imagination. A world where the notion of continuous existence itself is called into question. The Ancient Greek philosophers could have never foreseen wave particle duality, no scientist before the twentieth century could. The fabric of reality was in fact much more mysterious than anyone could have imagined.

From Charlie’s standpoint something was lost here though as these advancements and “discoveries” were made. He believed in progress no doubt, the notion that civilization progresses ever forward and that there was an underlying “evolution” of sorts that had taken place with humanity over the last several thousand years, but he did believe that some social and/or theological intellectual rift had been created in the twentieth century, and that some sort of replacement was needed. Without religion the moral and ethical framework of society is left governed only by the rule of law, a powerful force no doubt and perhaps grounded in an underlying sense of morality and ethics but the personal foundation of morality and ethics had been crushed with the advent of science from Charlie’s perspective, flooding the world into conflict and materialism, despite the economic progress and greater access to resources for mankind at large. It wasn’t science’s fault per se, but it was left up to the task of the intellectual community at large to find a replacement to that which had been lost from Charlie’s view. There was no longer any self-governing force of “do good to thy neighbor” that permeated society anymore, no fellowship of the common man, what was left to shape our world seemed to be a “what’s in it for me” and a “let’s see what I can get away with” attitude, one that flooded the court systems of the West and fueled radical religious groups and terrorism itself, leading to more warfare and strife rather than peace and prosperity which was supposed to be the promise of science wasn’t it? With the loss of God, his complete removal from the intellectual framework of Western society, there was a break in the knowledge and belief in the interconnectedness of humanity and societies at large, and Quantum Mechanics called this loss of faith of interconnectedness directly into question from Charlie’s perspective. 
If everything was connected, entangled, at the subatomic realm, if this was a proven and scientifically verified fact, how could we not take the next logical step and ask what that meant to our world-view? “That’s a philosophical problem” did not seem to be good enough for Charlie.

Abandonment of religion for something more profound was a good thing no doubt, but what was it that people really believed in nowadays in the Digital Era? That things and people were fundamentally separate, that they were operated on by forces that determined their behavior, and that the notion of God was for the ignorant and the weak and that eventually all of the underlying behavior and reality could be described within the context of the same science which discovered Relativity and Quantum Mechanics. Or worse that these questions themselves were not of concern, that our main concern is the betterment of ourselves and our individual families even if that meant those next to us would need to suffer for our gain? Well where did that leave us? Where do ethics and morals fit into a world driven by greed and self-promotion?

To be fair, Charlie did see some movement toward a more refined theological perspective toward the end of the twentieth century and into the 21st century, as Yoga started to become more popular and some of the Eastern theo-philosophical traditions such as Tai Chi and Buddhism started to gain a foothold in the West, looked at perhaps as more rational belief systems than the religions of the West, which have been and remain such a source of conflict and disagreement throughout the world. But the driving force for this adoption of Yoga in the West seemed to be more aligned with materialism and self-gain than with spiritual advancement and enlightenment. Charlie didn’t see this Eastern perspective permeating into broader society. It wasn’t being taught in schools, and the next generation, the Digital Generation, looked set to be more materialistic than its predecessors. Theology was relegated to the domain of religion, and in the West this wasn’t even fair game to teach in schools anymore.

The gap between science and religion that emerged as a byproduct of the Scientific Revolution remained significant, the last thing you were going to find were scientists messing around with the domain of religion, or even theology for that matter. Metaphysics maybe, in terms of what the developments of science said about reality, but most certainly not theology and definitely not God. And so our creation myth was bereft of a creator – the Big Bang had no actors, simply primal nuclear and subatomic forces at work against particles that expanded and formed gases and planets and ultimately led to us, the thinking, rational human mind who was capable of contemplating and discovering the laws of the universe and question our place in them, all a byproduct of natural selection, the guiding force was apparently random chance, time, and the genetic encoding of the will to survive as a species.

Quantum Physics

Perhaps quantum theory, quantum mechanics, could provide that bridge. There are some very strange behaviors that have been witnessed and modeled (and proven by experiment) at the quantum scale, principles that defy our notions of space and time that were cemented in the beginning of the twentieth century by Einstein and others. So Charlie dove in to quantum mechanics to see what he could find and where it led. For if there were gods or heroes in our culture today, they were the Einsteins, Bohrs, Heisenbergs and Hawkings of our time that defined our reality and determined what the next generation of minds were taught, those that broke open the mysteries of the universe with their minds and helped us better understand the world we live in. Or did they?

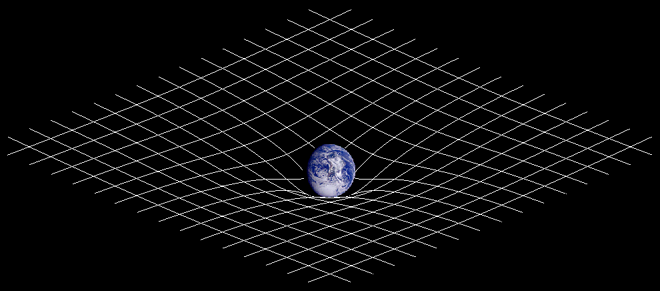

From Charlie’s standpoint, Relativity Theory could be grasped intellectually by the educated, intelligent mind. You didn’t need advanced degrees or a deep understanding of complex mathematics to understand that at a very basic level, Relativity Theory implied that Mass and Energy were equivalent, related by the speed of light that moved at a fixed speed no matter what your frame of reference, that space and time were not in fact separate and distinct concepts, that our ideas of three dimensional Cartesian space were inadequate for describing the world around us at the cosmic scale, that they were correlated concepts and are more accurately grouped together in the notion of spacetime which more accurately describes the motion and behavior of everything in the universe, more accurately than the theorems devised by Newton at least.

Relativity says that even gravity’s effect was subject to the same principles that played out at the cosmic scale, i.e. that spacetime “bends” at points of singularity (black holes for example), bends to the extent that light in fact is impacted by the severe gravitational forces at these powerful places in the universe. And indeed that our measurements of time and space were “relative”, relative to the speed and frame of reference from which these measurements were made, the observer was in fact a key element in the process of measurement. Although Relativity represented a major step in metaphysical or even scientific approach that expanded our notions of how the universe around us could be described, it still left us with a deterministic and realist model of the universe.

But at their basic, core level, these concepts could be understood, grasped as it were, by the vast majority of the public, even if they had very little if any bearing on their daily lives and didn’t fundamentally change or shift their underlying religious or theological beliefs, or even their moral or ethical principles. Relativity was accepted in the modern age, it just didn’t really affect the subjective frame of reference, the mental or intellectual frame of reference, within which the majority of humanity perceived the world around them. It was relegated to the realm of physics and a problem for someone else to consider and at best, a problem which needed to be understood to pass a physics or science exam in high school or college, to be buried in your consciousness in lieu of our more pressing daily and life pursuits be they family, career and money, or other forms of self-preservation in the modern, Digital era; an era most notably marked by materialism, self-promotion and greed.

Quantum Theory was different though. Its laws were more subtle and complex than the world described by classical physics, the world described in painstaking mathematical precision by Newton, Einstein and others. And after a lot of studying and research, the only conclusion that Charlie could definitively come to was that in order to understand quantum theory, or at least try to come to terms with it, a wholesale different perspective on what reality truly was, or at the very least how reality was to be defined, was required. In other words, in order to understand what quantum theory actually means, or in order to grasp the underlying intellectual context within which the behaviors of the underlying particles/fields that quantum theory describes were to be understood, a new framework of understanding, a new description of reality, must be adopted. What was real, as understood by classical physics which had dominated the minds of humankind for centuries, needed to be abandoned, or at the very least significantly modified, in order for quantum theory to be comprehended by any mind, or any mind that had spent any time struggling with quantum theory and trying to grasp it. Things would never be the same from a physics perspective, this much was clear, whether or not the daily lives of the bulk of those who struggle to survive in the civilized world would evolve along with them in concert with these developments remained to be seen.

Quantum Mechanics, also known as quantum physics or simply Quantum Theory, is the branch of physics that deals with the behavior of particles and matter in the atomic and subatomic realms, or quantum realm, so called given the quantized nature of “things” at this scale (more on this later). So you have some sense of scale, an atom is 10⁻⁸ cm across give or take, and the nucleus, or center of an atom, which is made up of what we now call protons and neutrons, is approximately 10⁻¹² cm across. An electron or a photon, the name we give to a “particle” of light, cannot truly be “measured” from a size perspective in terms of classical physics, for many of the reasons we’ll get into below as we explore the boundaries of the quantum world, but suffice it to say that at present our best estimate of the size of an electron is in the range of 10⁻¹⁸ cm or so[2].

Whether or not electrons, or photons (particles of light) for that matter, really exist as particles whose physical size, and/or momentum can be actually “measured” is not as straightforward a question as it might appear and gets at some level to the heart of the problem we encounter when we attempt to apply the principles of “existence” or “reality” to the subatomic realm, or quantum realm, within the context of the semantic and intellectual framework established in classical physics that has evolved over the last three hundred years or so; namely as defined by independently existing, deterministic and quantifiable measurements of size, location, momentum, mass or velocity.

The word quantum comes from the Latin quantus, meaning “how much”, and it is used in this context to identify the behavior of subatomic things that move from and between discrete states rather than a continuum of values or states as is presumed in classical physics. The term itself had taken on meanings in several contexts within a broad range of scientific disciplines in the 19th and early 20th centuries, but it acquired its specific physical meaning with the work of Max Planck at the turn of the 20th century, from which the field of study later named “quantum mechanics” grew, and it represents the prevailing and distinguishing characteristic of reality at this scale.

Newtonian physics, or even the extension of Newtonian physics as “discovered” by Einstein with Relativity theory in the beginning of the twentieth century (a theory whose accuracy is well established via experimentation at this point), assumes that particles, things made up of mass, energy and momentum, exist independent of the observer or their instruments of observation. They are presumed to exist in continuous form, moving along specific trajectories, and their properties (mass, velocity, etc.) can only be changed by the action of some force upon them. This is the essence of Newtonian mechanics, upon which the majority of modern day physics, or at least the laws of physics that affect us here at a human scale, is defined, and which philosophically falls into the realm of realism and determinism.

The only caveat to this view that was put forth by Einstein is that these measurements themselves, of speed or even mass or energy content of a specific object can only be said to be universally defined according to these physical laws within the specific frame of reference of an observer. Their underlying reality is not questioned – these things clearly exist independent of observation or measurement, clearly (or so it seems) – but the values, or the properties of these things is relative to a frame of reference of the observer. This is what Relativity tells us. So the velocity of a massive body, and even the measurement of time itself which is a function of distance and speed, is a function of the relative speed and position of the observer who is performing said measurement. For the most part, the effects of Relativity can be ignored when we are referring to objects on Earth that are moving at speeds that are minimal with respect to the speed of light and are less massive than say black holes. As we measure things at the cosmic scale, where distances are measured in terms of light years and black holes and other massive phenomena exist which bend spacetime, aka singularities, the effects of Relativity cannot be ignored.[3]
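To put a rough number on “can be ignored”, here is a minimal sketch that computes the standard Lorentz factor, gamma = 1 / sqrt(1 - v²/c²), which measures how much time and length measurements differ between observers in relative motion. The function name and the sample speeds are illustrative choices and do not come from the text.

```python
import math

C = 299_792_458.0  # speed of light in m/s (exact, by SI definition)


def lorentz_factor(speed_m_per_s: float) -> float:
    """Return gamma = 1 / sqrt(1 - v^2 / c^2) for a speed below c."""
    if not 0 <= speed_m_per_s < C:
        raise ValueError("speed must be in the range [0, c)")
    beta = speed_m_per_s / C
    return 1.0 / math.sqrt(1.0 - beta * beta)


if __name__ == "__main__":
    # Illustrative speeds only: everyday, planetary, and relativistic.
    samples = [
        ("airliner (250 m/s)", 250.0),
        ("Earth's orbital speed (about 30 km/s)", 3.0e4),
        ("half the speed of light", 0.5 * C),
        ("99% of the speed of light", 0.99 * C),
    ]
    for label, speed in samples:
        print(f"{label}: gamma = {lorentz_factor(speed):.12f}")
```

A gamma of exactly 1 means no relativistic correction at all. At everyday speeds the factor differs from 1 only far out in the decimal places, which is why Newton's laws serve us so well at a human scale.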

Leaving aside the field of cosmology for the moment and getting back to the history of the development of quantum mechanics (which arguably is integrally related to cosmology at a basic level), at the end of the 19th century Planck was commissioned by electric companies to create light bulbs that used less energy, and in this context was trying to understand how the intensity of electromagnetic radiation emitted by a black body (an object that absorbs all electromagnetic radiation regardless of frequency or angle of incidence) depended on the frequency of the radiation, i.e. the color of the light. In his work, and after several iterations of hypotheses that failed to have predictive value, he arrived at the theory that energy is only absorbed or released in quantized form, i.e. in discrete packets of energy he referred to as “bundles” or “energy elements”, the so called Planck postulate. And so the field of quantum mechanics was born.[4]

Despite the fact that Einstein is best known for his mathematical models and theories describing the forces of gravity and light at a cosmic scale, i.e. Relativity, his work was also instrumental in the advancement of quantum mechanics. For example, in his work on the effect of radiation on metallic matter and non-metallic solids and liquids, he showed that the emission of electrons from matter could be understood as a consequence of their absorption of energy from electromagnetic radiation of a very short wavelength, such as visible or ultraviolet radiation. Einstein established that in certain experiments light appeared to behave like a stream of tiny particles, later called photons, not just a wave, lending more credence and authority to the particle theories describing the quantum realm. He therefore hypothesized the existence of light quanta, or photons, as a result of these experiments, laying the groundwork for subsequent wave-particle duality discoveries and reinforcing the discoveries of Planck with respect to black body radiation and its quantized behavior.[5]

Wave-Particle Duality and Wavefunction Collapse

Before light’s properties as a wave were established, and before Louis de Broglie in turn proposed wave like characteristics for subatomic entities like photons and electrons in the 1920s, it had been fairly well established that these subatomic particles, electrons or photons as they were later called, behaved like particles. However the debate and study of the nature of light and subatomic matter went all the way back to the 17th century, when competing theories of the nature of light were proposed by Isaac Newton, who viewed light as a system of particles, and Christiaan Huygens, who postulated that light behaved like a wave. It was not until the work of Einstein, Planck, de Broglie and other physicists of the twentieth century that these subatomic particles, both light and electrons, were shown to behave both like particles and like waves, the result dependent upon the experiment and the context of the system which is being observed. This paradoxical principle, known as wave-particle duality, is one of the cornerstones, and underlying mysteries, of the reality described by Quantum Theory.

As part of the discoveries of subatomic particle wave-like behavior, what Planck discovered in his study of black body radiation (and Einstein as well within the context of his study of light and photons) was that the measurements or states of a given particle such as a photon or an electron, had to take on values that were multiples of very small and discrete quantities, i.e. non-continuous, the relation of which was represented by a constant value known as the Planck constant[6].
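To make the idea of discrete multiples concrete, here is a minimal sketch using the standard Planck relation, in which the energy carried at a frequency f comes in whole-number multiples of h times f. The function names and the sample wavelength and frequency are illustrative assumptions and do not come from the text; the constants are the exact SI values.

```python
H = 6.62607015e-34    # Planck constant in joule-seconds (exact, by SI definition)
C = 299_792_458.0     # speed of light in m/s (exact, by SI definition)
EV = 1.602176634e-19  # joules per electronvolt (exact, by SI definition)


def photon_energy_joules(wavelength_m: float) -> float:
    """Energy of a single quantum of light: E = h * f = h * c / wavelength."""
    return H * C / wavelength_m


def allowed_energies(frequency_hz: float, n_max: int) -> list[float]:
    """Energies 0, hf, 2hf, ... up to n_max * hf; nothing in between is allowed."""
    return [n * H * frequency_hz for n in range(n_max + 1)]


if __name__ == "__main__":
    green = photon_energy_joules(500e-9)  # green light, about 500 nm
    print(f"one green photon: {green:.3e} J = {green / EV:.3f} eV")

    for n, energy in enumerate(allowed_energies(6.0e14, 4)):
        print(f"n = {n}: {energy:.3e} J")
```

The steps are so small, on the order of 10⁻¹⁹ joules for visible light, that at a human scale energy looks perfectly continuous.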

In the quantum realm then, there was not a continuum of values and states of matter as had been assumed in physics up until that time. There were bursts of energy and changes of state that were ultimately discrete, and yet fixed, where certain states and certain values could in fact not exist, representing a dramatic departure from the way most of us think about movement and change in the “real world” and most certainly a significant departure from Newtonian mechanics upon which Relativity was based.[7]

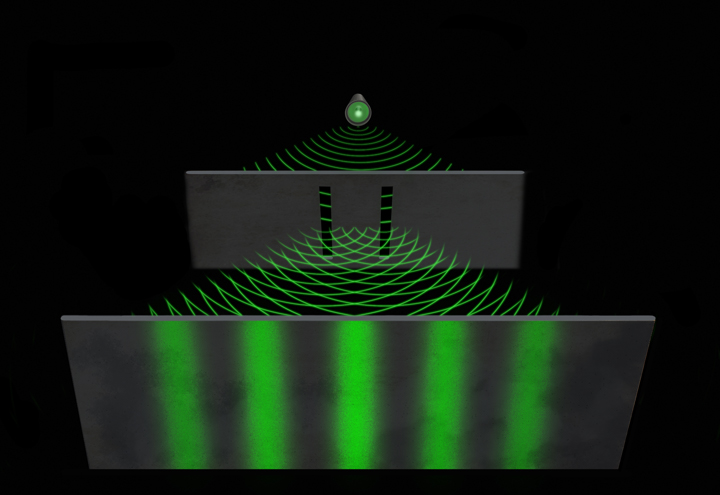

The classic demonstration of light’s behavior as a wave, and perhaps one of the most astonishing experiments of all time, is what is called the double-slit experiment[8]. In the basic version of this experiment, a light source such as a laser beam is shone at a thin plate that is pierced by two parallel slits. The light passes through each of the slits and displays on a screen behind the plate. The image on the screen is not a constant band of light behind each one of the slits, as you might expect if the light were simply a particle or set of particles. Instead the screen shows a pattern of light and dark bands, indicating that the light is behaving like a wave and is subject to interference, the strength of the light on the screen cancelling itself out or becoming stronger depending upon how the individual waves interfere with each other. This behavior is exactly akin to what we consider fundamental wavelike behavior, for example waves in water, which reinforce each other where they are in step (peak meeting peak) and cancel each other out where they are not (peak meeting trough).
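The light and dark bands can be sketched with the textbook formula for two idealized, very narrow slits, where the relative brightness at a position x on the screen is cos²(π d x / (λ L)). This is a simplified model under stated assumptions: it uses the small-angle approximation and ignores the width of each slit. The function names and the laser wavelength, slit separation and screen distance are all hypothetical values chosen for illustration.

```python
import math


def fringe_spacing(wavelength_m: float, slit_separation_m: float,
                   screen_distance_m: float) -> float:
    """Distance between neighboring bright bands: wavelength * L / d."""
    return wavelength_m * screen_distance_m / slit_separation_m


def relative_intensity(x_m: float, wavelength_m: float,
                       slit_separation_m: float,
                       screen_distance_m: float) -> float:
    """Brightness at screen position x, from 0 (dark) to 1 (brightest)."""
    phase = (math.pi * slit_separation_m * x_m
             / (wavelength_m * screen_distance_m))
    return math.cos(phase) ** 2


if __name__ == "__main__":
    # Hypothetical setup: red laser, slits 0.25 mm apart, screen 1 m away.
    wavelength, separation, distance = 633e-9, 0.25e-3, 1.0
    spacing = fringe_spacing(wavelength, separation, distance)
    print(f"bright bands repeat every {spacing * 1000:.3f} mm")

    # Crude text rendering of the bands across three fringe spacings.
    for step in range(-15, 16):
        x = step * spacing / 10
        level = relative_intensity(x, wavelength, separation, distance)
        print(f"{x * 1000:+7.3f} mm  " + "#" * round(40 * level))
```

The brightness peaks wherever the two paths differ by a whole number of wavelengths and drops to zero halfway between, which is the banded pattern described above.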

What is even more interesting however, and was most certainly unexpected, is that once equipment was developed that could reliably send a single particle (an electron or a photon for example, the behavior was the same) through a double-slitted plate, these particles did end up at a single location on the screen after passing through one of the slits, as was expected, but the location on the screen, as well as which slit the particle appeared to pass through (in later versions of the experiment which slit “it” passed through could in fact be detected), seemed to be somewhat random. What researchers found was that as more and more of these subatomic particles were sent through the plate one at a time, the same wave like interference pattern emerged that showed up when the experiment was run with a full beam of light, as was done by Young some 100 years prior.

So hold on for a second. Charlie had gone over this again and again, and all the literature he read on quantum theory and quantum mechanics pretty much said the same thing, namely that the heart of the mystery of quantum mechanics could be seen in this very simple experiment. And yet it was really hard, perhaps impossible, to understand what was actually going on, or at least to understand it without abandoning some of the very foundational principles of physics, like for example that these things called subatomic particles actually existed, because they seemed to behave like waves. Or did they?

What was clear was that this subatomic particle, corpuscle or whatever you wanted to call it, did not have a linear and fully deterministic trajectory in the classical physics sense. This much was very clear due to the fact that the distribution against the screen when they were sent through the double slits individually appeared to be random. But what was more odd was that when the experiment was run one corpuscle at a time, not only was the final location on the screen seemingly random individually, but the same pattern emerged after many, many single experiment runs as when a full wave, or set of these corpuscles, was sent through the double slits. So not only did the individual photon seem to be aware of the final wavelike pattern of its parent wave, but this corpuscle also appeared to be interfering with itself when it went through the two slits individually. What? What the heck was going on here?

Furthermore, to make things even more mysterious, as the final location of each of the individual photons in the two slit and other related experiments was evaluated and studied, it was discovered that although the final location of any individual particle could not be determined exactly before the experiment was performed, i.e. there was a fundamental element of uncertainty or randomness at the individual corpuscle level that could not be escaped, the final locations of these particles measured in toto after many experiments exhibited statistical behavior that could be modeled quite precisely from a mathematical statistics and probability distribution perspective. That is to say that the sum total distribution of the final locations of all the particles after passing through the slit(s) could be established stochastically, i.e. in terms of a well-defined probability distribution consistent with probability theory and the well-defined mathematics that governs statistical behavior. So in total you could predict in some sense what the behavior would look like over a large distribution set even if you couldn’t predict what the outcome would look like for an individual corpuscle.
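The way a predictable pattern builds up out of individually unpredictable hits can be mimicked with a few lines of Python. This is only a toy sketch under stated assumptions, not a simulation of the physics: it assumes an idealized fringe pattern proportional to cos² across the screen, and the names fringe_probability, sample_hit and histogram, along with the screen width and fringe spacing, are invented here for illustration.

```python
import math
import random

def fringe_probability(x, fringe_spacing=1.0):
    """Relative chance of a hit at screen position x (idealized cos^2 fringes)."""
    return math.cos(math.pi * x / fringe_spacing) ** 2

def sample_hit(rng, half_width=2.0, fringe_spacing=1.0):
    """Draw one random hit position by rejection sampling."""
    while True:
        x = rng.uniform(-half_width, half_width)
        if rng.random() < fringe_probability(x, fringe_spacing):
            return x

def histogram(hits, half_width=2.0, bins=16):
    """Count how many hits land in each equal-width strip of the screen."""
    counts = [0] * bins
    width = 2 * half_width / bins
    for x in hits:
        index = min(int((x + half_width) / width), bins - 1)
        counts[index] += 1
    return counts

# Send 20,000 "particles" through one at a time (seeded for repeatability).
rng = random.Random(0)
hits = [sample_hit(rng) for _ in range(20000)]
counts = histogram(hits)

# Print a crude picture of the screen: long bars are bright bands.
for count in counts:
    print("#" * (count // 50))
```

No single call to sample_hit can be predicted, yet the bars settle into alternating bright and dark strips as the number of hits grows, which is the sense in which the total distribution is lawful even though each corpuscle is not.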

The mathematics behind this particle distribution is what is known as the wave function, typically denoted by the Greek letter psi, ψ, or its capital equivalent Ψ. The wave function predicts what the probability distribution of these “particles” will look like on the screen behind the plate after many individual experiments are run, or in quantum theoretical terms, it describes the quantum state of a particle throughout a given spacetime interval. The equation that governs how the wave function evolves was discovered by the Austrian physicist Erwin Schrödinger in 1925 and published in 1926. It is commonly referred to in the scientific literature as the Schrödinger equation, and it plays a role in quantum mechanics analogous to that of Newton’s second law of motion in classical physics.
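In symbols, the two ideas in this paragraph are usually written as shown here. This is the standard textbook form for a single non-relativistic particle moving in one dimension, offered as a sketch: the mass m, the potential V(x), the reduced Planck constant ħ and the interval [a, b] are notation introduced for illustration and do not appear in the original text. The second line, which turns the wave function into a probability, is generally credited to Max Born rather than to Schrödinger.

```latex
i\hbar \,\frac{\partial \Psi(x,t)}{\partial t}
  = -\frac{\hbar^{2}}{2m}\,\frac{\partial^{2} \Psi(x,t)}{\partial x^{2}} + V(x)\,\Psi(x,t)

P(a \le x \le b \text{ at time } t) = \int_{a}^{b} \lvert \Psi(x,t) \rvert^{2}\, dx,
\qquad
\int_{-\infty}^{\infty} \lvert \Psi(x,t) \rvert^{2}\, dx = 1
```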

With the discovery of the wave function, or wavefunction, it now became possible to predict the potential locations or states of motion of these subatomic particles, an extremely potent theoretical model that has led to all sorts of inventions and technological advancements in the twentieth century and beyond. The wavefunction yields a probability distribution over the potential states or outcomes of a measurement on a particle, and that distribution predicts with a great degree of accuracy how often the particle will be found at a given location or in a given state of motion.

Again, this implied that individual corpuscles were interfering with themselves when passing through the two slits on the plate, which was very odd indeed. In other words, the individual particles were exhibiting wavelike characteristics even when they were sent through the double-slitted plate one at a time. This phenomenon was shown to occur with atoms as well as electrons and photons, confirming that all of these so-called particles exhibited wavelike properties as well as their particle like qualities, the behavior observed depending upon the type of experiment, or measurement as it were, that the “thing” was subject to.

Louis de Broglie was the physicist responsible for bridging the theoretical gap between the study of corpuscles (particles, matter or atoms) and waves by establishing the symmetric relation between momentum and wavelength which had at its core Planck’s constant, i.e. the de Broglie equation. He described this mysterious and somewhat counterintuitive relationship between wave and particle like behavior as follows:

A wave must be associated with each corpuscle and only the study of the wave’s propagation will yield information to us on the successive positions of the corpuscle in space[9].
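The relation de Broglie established is short enough to compute directly: wavelength equals Planck’s constant divided by momentum. The sketch below assumes the non-relativistic case, where momentum is simply mass times speed; the function name de_broglie_wavelength and the two example objects (an electron at a million meters per second and a 145 gram ball at 40 meters per second) are illustrative choices, not figures from the text.

```python
PLANCK_CONSTANT = 6.62607015e-34  # joule-seconds (exact in the SI system)
ELECTRON_MASS = 9.1093837e-31     # kilograms (rounded measured value)

def de_broglie_wavelength(mass_kg, speed_m_per_s):
    """Return the wavelength h / p in meters, using p = m * v (non-relativistic)."""
    momentum = mass_kg * speed_m_per_s
    return PLANCK_CONSTANT / momentum

# An electron moving at one million meters per second.
electron_wavelength = de_broglie_wavelength(ELECTRON_MASS, 1.0e6)

# A thrown ball, as an everyday object for comparison (assumed values).
ball_wavelength = de_broglie_wavelength(0.145, 40.0)

print(f"electron: {electron_wavelength:.3e} m")
print(f"ball:     {ball_wavelength:.3e} m")
```

The electron’s wavelength comes out on the scale of atomic spacings, which is why its wave nature can show up in experiments, while the ball’s is so many orders of magnitude smaller than the ball itself that no wavelike behavior is ever noticed.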

So by the 1920s then, you have a fairly well established mathematical theory to govern the behavior of subatomic particles, backed by a large body of empirical and experimental evidence, that indicates quite clearly that what we would call “matter” (or particles or corpuscles) in the classical sense, behaves very differently, or at least has very different fundamental characteristics, in the subatomic realm. It exhibits properties of a particle, or a thing or object, as well as a wave depending upon the type of experiment that is run. So the concept of matter itself then, as we had been accustomed to dealing with and discussing and measuring for some centuries, at least as far back as the time of Newton (1642-1727), had to be reexamined within the context of quantum mechanics. For in Newtonian physics, and indeed in the geometric and mathematical framework within which it was developed and conceived which went back to ancient times (Euclid 300 BCE), matter was presumed to be either a particle or a wave, but most certainly not both.

What even further complicated matters was that matter itself, again as defined by Newtonian mechanics and its extension via Relativity Theory, taken together what is commonly referred to as classical physics, was presumed to have some very definite, well-defined and fixed, real properties. Properties like mass, location or position in space, and velocity or trajectory were all presumed to have a real existence independent of whether or not they were measured or observed, even if the actual values were relative to the frame of reference of the observer. All of this hinged upon the notion that the speed of light was fixed no matter what the frame of reference of the observer of course, this was a fixed absolute, nothing could move faster than the speed of light. Well even this seemingly self-evident notion, or postulate one might call it, ran into problems as scientists continued to explore the quantum realm.

So by the 1920s then, the way scientists viewed matter as we would classically consider it, within the context of Newton’s postulates from the late 1600s as extended into the notion of spacetime by Einstein, was encountering some significant difficulties when applied to the behavior of elements in the subatomic, quantum, world. Furthermore, there was extensive empirical evidence which lent significant credibility to quantum theory, and which illustrated that these subatomic elements behaved not only like waves, exhibiting characteristics such as interference and diffraction, but also like particles in the classic Newtonian sense, with measurable, well defined characteristics that could be quantified within the context of an experiment.

In his Nobel Lecture in 1929, Louis de Broglie summed up the challenge for physicists of his day, and to a large extent physicists of modern times, given the discoveries of quantum mechanics, as follows:

The necessity of assuming for light two contradictory theories – that of waves and that of corpuscles – and the inability to understand why, among the infinity of motions which an electron ought to be able to have in the atom according to classical concepts, only certain ones were possible: such were the enigmas confronting physicists at the time…[10]

Uncertainty, Entanglement, and the Cat in a Box

The other major tenet of quantum theory that rests alongside wave-particle duality, and that provides even more complexity when trying to wrap our minds around what is actually going on in the subatomic realm, is what is referred to as the uncertainty principle, or the Heisenberg uncertainty principle. It is named after the German theoretical physicist Werner Heisenberg, who first put forth the limits on what can be known about the position and momentum of these subatomic particles in experiments like the double-slit experiment previously described, even though the wave equation itself was the discovery of Schrödinger.

The uncertainty principle states that there is a fundamental limit on the accuracy with which certain pairs of physical properties of subatomic particles, position and momentum being the classic pair, can be known at the same time. In other words, physical quantities come in conjugate pairs, and the two members of a pair cannot both be known precisely at once: the more precisely one attempts to measure one of these complementary properties, the less precisely the other can be determined or known. In the limit, when one quantity in a conjugate pair is measured exactly and becomes determined, its partner becomes completely indeterminate.
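Stated as a formula, the position and momentum version of the principle is usually written as follows. The symbols are standard but are not used elsewhere in this text: σ_x and σ_p stand for the statistical spread (standard deviation) of position and momentum measurements over many identically prepared particles, and ħ is Planck’s constant divided by 2π.

```latex
\sigma_x \, \sigma_p \;\ge\; \frac{\hbar}{2}
```

Because the right hand side is a fixed constant, squeezing σ_x toward zero forces σ_p to grow without bound, and vice versa.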

Published by Heisenberg in 1927, the uncertainty principle states that there are fundamental, conceptual limits of observation in the quantum realm, another radical departure from the principles of Newtonian mechanics, which held that all attributes of a thing were measurable at any given time, i.e. existed or were real. The uncertainty principle is a statement about a fundamental property of quantum systems as they are mathematically and theoretically modeled and defined, and of course empirically validated by experimental results, not a statement about the technology and method of the observational systems themselves. This is an important point. This wasn’t a problem with the state of the instrumentation that was being used for measurement, it was a characteristic of the domain itself.

Max Born, who won the Nobel Prize in Physics in 1954 for his work in quantum mechanics, specifically for his statistical interpretation of the wave function, describes this other seemingly mysterious attribute of the quantum realm as follows (the specific language he uses reveals at some level his interpretation of quantum theory, more on interpretations later):

…To measure space coordinates and instants of time, rigid measuring rods and clocks are required. On the other hand, to measure momenta and energies, devices are necessary with movable parts to absorb the impact of the test object and to indicate the size of its momentum. Paying regard to the fact that quantum mechanics is competent for dealing with the interaction of object and apparatus, it is seen that no arrangement is possible that will fulfill both requirements simultaneously.[11]

Whereas classical physics, i.e. physics prior to the introduction of relativity and quantum theory, distinguished between the study of particles and waves, the introduction of quantum theory and wave-particle duality established that this classic intellectual bifurcation of physics at the macroscopic scale was wholly inadequate in describing and predicting the behavior of these “things” that existed in the subatomic realm, all of which took on the characteristics of both waves and particles depending upon the experiment and context of the system being observed. Furthermore, the precision with which the state of a “thing” in the subatomic world could be defined was conceptually limited, another divergence from classical physics. And then on top of this was the requirement of the mathematical principles of statistics and probability theory, as well as significant extensions to the underlying geometry, to describe and model the behavior at this scale, all calling into question the classical materialistic notions and beliefs that we had held so dear for centuries.

Even after the continued refinement and experimental evidence that supported Quantum Theory however, there did arise some significant resistance to the completeness of the theory itself, or at least questions as to its true implications with respect to Relativity and Newtonian mechanics. The most notable of these criticisms came from Einstein himself, most famously encapsulated in a paper he co-authored with two of his colleagues, Boris Podolsky and Nathan Rosen, published in 1935. The paper came to be known simply as the EPR paper, and its argument as the EPR paradox, and it called attention to what the authors saw as the underlying inconsistencies of the theory that still required explanation. In this paper they extended some of the quantum theoretical models to different thought experiments/scenarios to yield what they considered to be at the very least improbable, if not impossible, conclusions.

They postulated that given the formulas and mathematical models that described the current state of quantum theory, i.e. a wave function that described the probabilistic outcomes for a given subatomic system, if such a system were transformed into two systems – split apart if you will – then both systems would by definition be governed by the same wave function, and their subsequent behavior and state would be related no matter what their separation in spacetime. This seemed to violate one of the core tenets of classical physics, namely that no influence can travel faster than the speed of light. The result was held to be mathematically true and consistent with quantum theory, although at the time it could not be validated via experiment.

They went on to show that if this is true, it implies that if you have a single particle system that is split into two separate particles and subsequently measured, these two now separate and distinct particles would then be governed by the same wave function, and in turn by the same uncertainty principle outlined by Heisenberg; namely that a definite measurement of a property in system A fixes the “correlated” value in system B and leaves its conjugate in system B indeterminate, even if the two systems had no classical physical contact with each other and were light years apart from each other.

But hold on a second, how could this be possible? How could you have two separate “systems”, governed by the same wave function, or behavioral equation so to speak, such that no matter how far apart they were, and no matter how much time elapsed between measurements, a measurement in one system fundamentally correlated with (or anti-correlated with, the argument is the same) a measurement in the other system that it is separate from? They basically took the wave function theory, which governs the behavior of quantized particles, and its corresponding implication of uncertainty as outlined most notably by Heisenberg, and extended it to multiple related subatomic systems, governed by the same wave function despite their separation in space (and time), yielding a very awkward and somewhat unexplainable result, at least unexplainable in terms of classical physics.

The question they raised boiled down to this: how could you have two separate, distant systems whose measurements or underlying structure depended upon each other in a very well-defined, mathematically precise and (theoretically at the time, but subsequently verified via experiment) empirically measurable way? Does that imply that these systems are communicating in some way, either explicitly or implicitly? If so that would seem to call into question the principle of the fixed speed of light that was core to Relativity Theory. The alternative seemed to be that the theory was incomplete in some way, which was Einstein’s view. Were there “hidden”, yet to be discovered variables that governed the behavior of quantum systems, what came to be known in the literature as hidden variable theories?

If it were true, and in the past half century or so many experiments have verified this, it was at the very least extremely odd behavior, or perhaps better put reflected very odd characteristics, certainly inconsistent with the prevailing theories of physics, or at least with the descriptions of “reality” that we had grown accustomed to. Are these two subsystems, once correlated, communicating with each other? Is there some information that is being passed between them that violates the speed of light boundary that forms the cornerstone of classical physics? This seems unlikely, and most certainly is something that Einstein felt uncomfortable with. This “spooky action at a distance”, as Einstein referred to it, seemed literally to defy the laws of physics. But the alternative appeared to be that this notion of what we consider to be “real”, at least as it was classically defined, would need to be modified in some way to take into account this correlated behavior between particles or systems that were physically separated beyond classical boundaries.

From Einstein’s perspective, two possible explanations for this behavior were put forth, 1) either there existed some model of behavior of the interacting systems/particles that was still yet undiscovered, so called hidden variables, or 2) the notion of locality, or perhaps more aptly put as the tenet of local determinism (which Einstein and others associated directly and unequivocally with reality), which underpinned all of classical physics had to be drastically modified if not completely abandoned.

In Einstein’s words however, the language for the first alternative that he seemed to prefer was not that there were hidden variables per se, but more so that quantum theory as it stood in the first half of the twentieth century was incomplete. That is to say that some variable, coefficient or hidden force was missing from quantum theory which was the driving force behind the correlated behavior of the attributes of these physically separate particles that were separate beyond classical means of communication in any way. For Einstein it was the completeness option that he preferred, unwilling to consider the idea that the notion of locality was not absolute. Ironically enough, hindsight being twenty-twenty and all, Einstein had just postulated that there was no such thing as absolute truth, or absolute reality, on the macroscopic and cosmic physical plane with Relativity Theory, so one might think that he would have been more open to relaxing this requirement in the quantum realm, but apparently not, speaking to the complexities and subtleties of quantum theory implications even for some of the greatest minds of the time.

Probably the most widely known metaphor that illustrates Einstein’s and others’ criticism of quantum theory is the thought experiment, or paradox as it is sometimes called, known as Schrödinger’s cat.[12] In this thought experiment, which according to tradition emerged out of discussions between Schrödinger and Einstein just after the EPR paper was published, a cat is placed in a fully sealed and enclosed box with a radioactive source that decays at a known, quantifiable rate, slow enough that over the course of the experiment there is a real chance that no decay occurs at all. In the box with the cat is a radioactivity monitor which detects any decay, and a flask of poison that is released if the monitor is triggered. Since quantum theory governs the decay only as a probability distribution over time, it is impossible to say at any given moment, until the box is opened in fact, whether the cat is dead or alive. But how could this be? The cat is in an undefined state until the box is opened? There is nothing definitive that we can say about the state of the cat independent of actually opening the box? This calls into question, bringing the analogy to the macroscopic level, whether or not according to quantum theory reality can be defined independent of observation (or measurement) within the context of the cat, the box and the radioactive particle and its associated monitor.

In the course of developing this experiment, Schrödinger coined the term entanglement[13], one of the great still unsolved mysteries, or perhaps better called paradoxes, that exist to this day in quantum theory/mechanics. It is mysterious not in the sense of whether or not the phenomenon actually exists, since entanglement has been verified in a variety of experiments along the lines outlined in the EPR paper and is accepted as a scientific fact in the physics community, but in the sense of how this can be possible given that it seems, at least on the face of it, to fly in the face of classical Newtonian mechanics, almost determinism itself actually. Schrödinger himself is probably the best person to turn to in order to understand quantum entanglement, and he describes it as follows:

When two systems, of which we know the states by their respective representatives, enter into temporary physical interaction due to known forces between them, and when after a time of mutual influence the systems separate again, then they can no longer be described in the same way as before, viz. by endowing each of them with a representative of its own. I would not call that one but rather the characteristic trait of quantum mechanics, the one that enforces its entire departure from classical lines of thought. By the interaction the two representatives [the quantum states] have become entangled.[14]
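A concrete example may help make Schrödinger’s “representatives” less abstract. The state below is the textbook spin singlet for two spin one-half particles, labeled A and B here purely for illustration; it is not taken from Schrödinger’s paper. The claim is that no choice of individual states for A and for B multiplies out to give it, which is what it means for the pair to have no representatives of their own.

```latex
\lvert \Psi \rangle_{AB}
  = \frac{1}{\sqrt{2}}
    \Bigl( \lvert \uparrow \rangle_A \lvert \downarrow \rangle_B
         - \lvert \downarrow \rangle_A \lvert \uparrow \rangle_B \Bigr)
  \;\neq\; \lvert \phi \rangle_A \otimes \lvert \chi \rangle_B
  \quad \text{for any } \lvert \phi \rangle_A,\ \lvert \chi \rangle_B
```

Measured along the same axis, the two spins always come out opposite, yet the state assigns neither particle a definite spin of its own.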

The principle of entanglement calls into question what is known as local realism; “local” in the sense that all the behaviors and data of a given system are determined by the qualities or attributes of the objects within that system, bounded by spacetime as defined by Newtonian mechanics and Relativity, or by some force that is acting upon said system, and “real” in the sense that the system itself exists independent of observation or the apparatus/elements of observation.

Taking the non-local explanation to the extreme, and this is something which has prompted quite a bit of what can reasonably be called hysterical reaction in some academic and pseudo-academic communities even to this day, the argument runs as follows: if two entities that are separated in spacetime far enough that no signal at the speed of light could pass between them do indeed hold a distinct and mathematically predictable correlation, i.e. this notion of entanglement or “action at a distance” as it is sometimes called, then all of classical physics is called into question. Einstein specifically dismissed these “spooky action at a distance” theories, so firmly did he believe in the invariable tenets of Relativity, and it’s hard to argue with his position quite frankly because correlation does not necessarily imply communication. But if local realism and its underlying tenets of determinism are to be held fast to, then where does that leave quantum theory?

This problem gets somewhat more crystallized, or well defined, in 1964 when the physicist John Stewart Bell (1928-1990), in his seminal paper entitled “On the Einstein Podolsky Rosen Paradox”, takes the EPR argument one step further and proves mathematically, via a reductio ad absurdum argument, that if quantum theory is true then no hidden parameter or variable theory could possibly exist that reproduces all of the predictions of quantum mechanics and is also consistent with locality[15]. In other words, Bell asserted that the hidden variable hypothesis, or at the very least a broad category of hidden variable hypotheses, was incompatible with quantum theory itself, unless the notion of locality was abandoned or at least relaxed to some extent. In his own words:

In a theory in which parameters are added to quantum mechanics to determine the results of individual measurements, without changing the statistical predictions, there must be a mechanism whereby the setting of one measuring device can influence the reading of another instrument, however remote. Moreover, the signal involved must propagate instantaneously, so that a theory could not be Lorentz invariant.[16]

This assertion is called Bell’s theorem, and it posits that quantum mechanics and the concept of locality, which again states that an object is influenced directly only by its immediate surroundings and is a cornerstone of the theories of Newton and Einstein regarding the behavior of matter and the objective world, are mathematically incompatible and inconsistent with each other. This provided further impetus, as it were, for the view that this classical notion of locality was in need of closer inspection, modification or perhaps even abandonment entirely.
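The gap Bell identified can be made concrete with a small calculation. The sketch below does not use Bell’s original 1964 inequality but a later and more commonly quoted reformulation, the CHSH inequality of Clauser, Horne, Shimony and Holt, under which any local hidden variable model yields a combined correlation S of at most 2. The detector angles are the standard textbook choice, and toy_local_correlation is a hypothetical hidden variable model invented here purely for comparison; it is one example of a local model, not a proof about all of them.

```python
import math

def singlet_correlation(angle_a, angle_b):
    """Quantum prediction for the spin singlet: E(a, b) = -cos(a - b)."""
    return -math.cos(angle_a - angle_b)

def toy_local_correlation(angle_a, angle_b, steps=3600):
    """Correlation from a hypothetical local hidden variable model.

    Each pair carries a shared hidden angle; each detector's result (+1 or -1)
    depends only on its own setting and that hidden angle.
    """
    total = 0
    for k in range(steps):
        hidden = 2 * math.pi * (k + 0.5) / steps  # average over hidden angles
        result_a = 1 if math.cos(angle_a - hidden) > 0 else -1
        result_b = -1 if math.cos(angle_b - hidden) > 0 else 1
        total += result_a * result_b
    return total / steps

def chsh_value(correlation, a, a_prime, b, b_prime):
    """CHSH combination of four correlations; local models give at most 2."""
    return abs(
        correlation(a, b)
        - correlation(a, b_prime)
        + correlation(a_prime, b)
        + correlation(a_prime, b_prime)
    )

# Detector settings in radians: a, a', b, b'.
settings = (0.0, math.pi / 2, math.pi / 4, 3 * math.pi / 4)

quantum_s = chsh_value(singlet_correlation, *settings)
local_s = chsh_value(toy_local_correlation, *settings)

print(f"quantum prediction: S = {quantum_s:.3f}")
print(f"toy local model:    S = {local_s:.3f}")
```

With these settings the quantum prediction comes out at 2√2, roughly 2.83, while the toy local model lands exactly on the bound of 2. It is that difference which experiments are able to test.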

Although there still exists some debate among physicists as to whether or not there is enough experimental evidence to confirm the predictions behind Bell’s theorem beyond a shadow of a doubt, it seems to be broadly accepted in the scientific community that this property of entanglement exists beyond classical physical boundaries. However, the question as to whether or not all types of hidden variable theories are ruled out by Bell’s theorem appears to be a legitimate question and is still up for debate, and perhaps this loophole more so than any other is the path which Bohm and Hiley take with their Causal, or Ontological, Interpretation of Quantum Theory (more below).

Criticisms of Bell’s theorem and the related experiments aside however, if you believe quantum theory, and you’d be hard pressed not to at this point, you must conclude that the theory is at odds in some way with the local picture of the world that underpins Relativity, a rather disconcerting and problematic conclusion for the twentieth century physicist to say the least, and a problem which plagues, and motivates, many modern theoretical physicists to this day.

Quantum Theory then, as expressed with Bell’s theorem, Heisenberg’s uncertainty principle and this idea of entanglement, asserts that there exists a level of interconnectedness between physically disparate systems that defies, at least at some level, the classical physics notion of deterministic locality, pointing to either the incompleteness of quantum theory or to the requirement of some sort of non-trivial modification of the concept of local realism which has underpinned classical physics for the last few centuries if not longer.

In other words, the implication of quantum theory, a theory with very strong predictive power and experimental evidence backing up the soundness and strength of the underlying math, is that there is something else at work that connects the state of particles or things at the subatomic scale, something that cannot be altogether described, pinpointed, or explained. Einstein himself was still struggling with this notion even toward the end of his lifetime, in 1954, when he said:

…The following idea characterizes the relative independence of objects far apart in space, A and B: external influence on A has no direct influence on B; this is known as the Principle of Local Action, which is used consistently only in field theory. If this axiom were to be completely abolished, the idea of the existence of quasi enclosed systems, and thereby the postulation of laws which can be checked empirically in the accepted sense, would become impossible….[17]

Interpretations of Quantum Theory: Back to First Philosophy

There is no question as to the soundness of the mathematics behind quantum theory and there is now a very large body of experimental evidence that supports the underlying mathematics, including empirical evidence of not only the particle behavior that it intends to describe (as in the two slit experiment for example), but also experimental evidence that confirms the correlations at the heart of Bell’s theorem and the EPR Paradox. What is somewhat less clear however, and what arguably may belong more to the world of metaphysics and philosophy rather than physics, is how quantum theory is to be interpreted as a representation of reality given the state of affairs that it introduces. What does quantum theory tell us about the world we live in, irrespective of the soundness of its predictive power? This is a question that physicists, philosophers and even theologians have struggled with ever since the theory gained wide acceptance and prominence in the scientific community in the 1930s.

There are many interpretations of quantum theory but there are three in particular that Charlie thought deserved attention due primarily to a) their prevalence or acceptance in the academic community, and/or b) their impact on scientific or philosophical inquiry into the limits of quantum theory.

The three are the Copenhagen Interpretation, the standard, orthodox interpretation of quantum theory and the one against which differing interpretations are most often compared, which confines the interpretation of the theory to the experiment itself; the Many-worlds (or Many-minds) interpretation, which explores the boundaries of the nature of reality, proposing in some extreme variants the existence of multiple universes/realities simultaneously; and the Causal Interpretation, also sometimes called De Broglie-Bohm theory or Bohmian mechanics, which extends the theory to include the notion of quantum potential and at the same time abandons the classical notion of locality but still preserves objective realism and determinism.[18]

The most well established and most commonly accepted interpretation of Quantum Theory, the one that is most often taught in schools and textbooks and the one that most alternative interpretations are compared against, is the Copenhagen Interpretation[19]. The Copenhagen interpretation holds that the theories of quantum mechanics do not yield a description of an objective reality, but deal only with sets of probabilistic outcomes of experimental values borne from experiments observing or measuring various aspects of energy quanta, entities that do not fit neatly into classical interpretations of mechanics. The underlying tenet here is that the act of measurement itself, i.e. the observer (or by extension the apparatus of observation), causes the set of probabilistic outcomes to converge on a single outcome, a feature of quantum mechanics commonly referred to as wavefunction collapse. Any additional interpretation of what might actually be going on, i.e. of the underlying reality, defies explanation, and attempting one is in fact held to be inconsistent with the fundamental mathematical tenets of the theory itself.

In this interpretation of quantum theory, reality (used here in the classical sense of the term as existing independent of the observer) is a function of the experiment, and is defined as a result of the act of observation and has no meaning independent of measurement. In other words, reality in the quantum world from this point of view does not exist independent of observation, or put somewhat differently, the manifestation of what we think of or define as “real” is intrinsically tied to and related to the act of observation of the system itself.
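The idea of a set of probabilistic outcomes converging on a single outcome can be made concrete with a small sketch. What follows is only an illustration under stated assumptions, not a model of any real experiment: the two-outcome state, its amplitudes, and the function names born_probabilities and measure are all hypothetical choices made here. It assumes the textbook rule that the probability of each outcome is the squared magnitude of its amplitude, and it treats "collapse" as replacing the state with the one outcome that was observed.

```python
import random


def born_probabilities(amplitudes):
    """Return the probability of each outcome as |amplitude|^2, normalized."""
    norm = sum(abs(a) ** 2 for a in amplitudes)
    return [abs(a) ** 2 / norm for a in amplitudes]


def measure(amplitudes, rng):
    """Pick one outcome at random, weighted by the probabilities above,
    and return it together with the 'collapsed' state in which only
    that outcome remains."""
    probs = born_probabilities(amplitudes)
    outcome = rng.choices(range(len(amplitudes)), weights=probs)[0]
    collapsed = [1.0 if i == outcome else 0.0 for i in range(len(amplitudes))]
    return outcome, collapsed


# Hypothetical two-outcome state with amplitudes 3/5 and 4i/5.
state = [3 / 5, 4j / 5]
probs = born_probabilities(state)  # roughly 0.36 and 0.64
outcome, collapsed = measure(state, random.Random(0))
print(probs, outcome, collapsed)
```

Before the call to measure, the state carries only probabilities; afterwards a single outcome remains. In the Copenhagen reading nothing further can be said about what happened in between, which is exactly the step that the alternative interpretations discussed below call into question.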

Niels Bohr was one of the strongest proponents of this interpretation, an interpretation which refuses to associate any metaphysical implications with the underlying physics. He held that, given this proven interdependence between that which was being observed and the act of observation, no metaphysical interpretation could in fact be extrapolated from the theory. It was, and could only be, a tool to describe and measure states and particle/wave behavior in the subatomic realm as the result of some well-defined experiment; attempting to determine what quantum theory actually meant violated the fundamental tenets of the theory itself. From Bohr’s perspective, the inability to draw conclusions beyond the results of the experiments which the theory covers was a necessary consequence of the theory’s basic tenets, and that was the end of the matter. This view can be seen as the logical conclusion of the notion of complementarity, one of the fundamental and intrinsic features of quantum mechanics that makes it so mysterious and hard to describe or understand in classical terms.

Complementarity, which is closely tied to the Copenhagen interpretation, expresses the notion that in the quantum domain the results of experiments, the values yielded (or observables), are fundamentally tied to the act of measurement itself. In order to obtain a complete picture of the state of any given system, as bound by the uncertainty principle, one would need to run multiple experiments on that system, each result in turn rounding out the notion of the state, or reality, of said system. These combined features said something profound about the underlying uncertainty of the theory itself. Perhaps complementarity can be viewed as the twin of uncertainty, or its inverse postulate. Bohr summarized this very subtle and yet at the same time very profound notion of complementarity in 1949 as follows:

…however far the [quantum physical] phenomena transcend the scope of classical physical explanation, the account of all evidence must be expressed in classical terms. The argument is simply that by the word “experiment” we refer to a situation where we can tell others what we have learned and that, therefore, the account of the experimental arrangements and of the results of the observations must be expressed in unambiguous language with suitable application of the terminology of classical physics.

This crucial point…implies the impossibility of any sharp separation between the behavior of atomic objects and the interaction with the measuring instruments which serve to define the conditions under which the phenomena appear…. Consequently, evidence obtained under different experimental conditions cannot be comprehended within a single picture, but must be regarded as complementary in the sense that only the totality of the phenomena exhausts the possible information about the objects.[20]

Complementarity was in fact the core underlying principle which drove the existence of the uncertainty principle from Bohr’s perspective; it was the underlying characteristic and property of the quantum world that captured at some level its very essence. And complementarity, taken to its logical and theoretical limits, did not allow or provide any framework for describing or defining the real world outside of the domain with which it dealt, namely the measurement values or results, the measurement instruments themselves, and the act of measurement itself.

Another interpretation, or possible question to be asked given the uncertainty implicit in Quantum Theory, was that perhaps all possible outcomes described in the wave function did in some respect manifest, even if they could not all be seen or perceived in our objective reality. This premise underlies an interpretation of quantum theory that has gained some prominence in the last few decades, especially within the computer science and computational complexity fields, and has come to be known as the Many-Worlds interpretation.

The original formulation of this theory was laid out by Hugh Everett in his PhD thesis in 1957, in a paper entitled The Theory of the Universal Wave Function, wherein he referred to the interpretation not as the many-worlds interpretation but as the Relative-State formulation of Quantum Mechanics (more on this distinction below). The theory was subsequently developed and expanded upon by several authors, and the term many-worlds stuck.[21]

In Everett’s original exposition of the theory, he begins by calling out some of the problems with the original, or classic, interpretation of quantum mechanics. Specifically, he points to what he and other members of the physics community believed to be the artificial creation of the wavefunction collapse construct to explain the transition from uncertain quantum behavior to deterministic behavior, as well as the difficulty that this interpretation had in dealing with systems that consisted of more than one observer. These were the main drivers for an alternative interpretation of quantum theory, or what he referred to as a metatheory, given that the standard interpretation could be derived from it.

Bohr, and presumably Heisenberg and von Neumann, whose collective views on the interpretation of quantum theory make up what is now commonly referred to as the Copenhagen Interpretation, would no doubt explain away these seemingly contradictory and inconsistent problems as out of scope of the theory itself (i.e. quantum theory is a theory that is intellectually and epistemologically bound by the experimental apparatus and its results, which provide the scope of the underlying mechanics). Everett finds this view lacking, as it fundamentally prevents us from giving any true explanation of what the theory says about “reality”, or the real world as it were: a world considered to be governed by the laws of classical physics, where things and objects exist independent of observers and have real, static, measurable and definable qualities, a world fundamentally incompatible with the stochastic and uncertain characteristics that govern the behavior of “things” in the subatomic or quantum realm.

The aim is not to deny or contradict the conventional formulation of quantum theory, which has demonstrated its usefulness in an overwhelming variety of problems, but rather to supply a new, more general and complete formulation, from which the conventional interpretation can be deduced.[22]

Everett starts by making the following basic assumptions, from which he devises his somewhat counterintuitive yet now relatively widely accepted interpretation of quantum theory: 1) all physical systems, large or small, can be described as states within Hilbert space, the fundamental geometric framework upon which quantum mechanics is constructed; 2) the concept of an observer can be abstracted to a machine-like entity with access to unlimited memory, which stores a history of previous states, or previous observations, and has the ability to make deductions, or associations, regarding actions and behavior based solely upon this memory and this simple deductive process, thereby incorporating observers and acts of observation (i.e. measurement) completely into the model; and 3) given assumptions 1 and 2, the entire state of the universe, which includes the observers within it, can be described in a consistent, coherent and fully deterministic fashion without the need for the notion of wavefunction collapse, or any additional assumptions for that matter.

Everett makes what he calls a simplifying assumption to quantum theory, i.e. removing the need for or notion of wavefunction collapse, and assumes the existence of a universal wave function which accounts for and describes the behavior of all physical systems and their interaction in the universe, absorbing the observer and the act of observation into the model, observers being simply another form of quantum state that interacts with the environment. Once these assumptions are made, he can then abstract the concept of measurement as just interactions between quantum systems, all governed by this same universal wave function. In Everett’s metatheory, the notion of what an observer is and how it fits into the overall model is fully defined, and the challenge stemming from the seemingly arbitrary notion of wavefunction collapse is resolved.
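To see what it means to treat measurement as nothing more than an interaction between quantum systems, consider the following toy sketch. It is an illustration under simplifying assumptions rather than Everett’s own formalism: the system has two possible values, the observer is reduced to a single memory cell that starts out "ready" (0), and the function names premeasurement and interact are hypothetical. The interaction simply copies the system’s value into the memory for each component of the state, which is a reversible relabeling of the basis states and therefore involves no collapse.

```python
def premeasurement(system):
    """Joint state of the system and an observer memory that starts at 0.
    Keys are (system_value, memory_value); values are amplitudes."""
    return {(s, 0): amp for s, amp in system.items()}


def interact(joint):
    """Measurement as interaction: the memory is flipped exactly when the
    system value is 1, so afterwards the memory records the system value.
    This only permutes basis states, so no amplitude is discarded."""
    return {(s, m ^ s): amp for (s, m), amp in joint.items()}


# Hypothetical system in a superposition of the values 0 and 1.
system = {0: 0.6, 1: 0.8}
joint = interact(premeasurement(system))

# Every component pairs a system value with a matching memory record.
branches_correlated = all(s == m for (s, m) in joint)
# The total probability is unchanged by the interaction.
total = sum(abs(a) ** 2 for a in joint.values())
print(joint, branches_correlated, total)
```

After the interaction both components are still present, each containing an observer whose memory agrees with the system value in that component. The observer’s record is defined only relative to the corresponding system state, which is one way of reading the name Relative-State formulation.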

In Everett’s view, there exists a universal wavefunction which corresponds to an objective, deterministic reality, and the notion of wavefunction collapse as put forth by von Neumann (and reflective of the standard interpretation of quantum mechanics) represents not a collapse, so to speak, but the realization of one possible outcome of measurement that exists in our “reality”, or multi-verse.

But from Everett’s perspective, if you take what can be described as a literal interpretation of the wavefunction as the overarching description of reality, this implies that the rest of the possible states reflected in the wave function of that system do not cease to exist with the act of observation, with the collapse of the quantum mechanical wave that describes said system state in Copenhagen quantum mechanical nomenclature, but that these other states do have some existence that persists but are simply not perceived by us. In his own words, and this is a subtle yet important distinction between Everett’s view and the view of subsequent proponents of the many-worlds interpretation, they remain uncorrelated with the observer and therefore they do not exist in their manifest reality.

We now consider the question of measurement in quantum mechanics, which we desire to treat as a natural process within the theory of pure wave mechanics. From our point of view there is no fundamental distinction between “measuring apparata” and other physical systems. For us, therefore, a measurement is simply a special case of interaction between physical systems – an interaction which has the property of correlating a quantity in one subsystem with a quantity in another.[23]

This implies of course that these unperceived states do have some semblance of reality, that they do in fact exist as possible realities, realities that are thought to have varying levels of “existence” depending upon which version of the many-worlds interpretation you adhere to. With DeWitt and Deutsch for example, a more literal, or “actual” you might say, interpretation of Everett’s original theory is taken, where these other states, these other realities or multi-verses, do in fact physically exist even though they cannot be perceived or validated by experiment.[24] This is a more literal interpretation of Everett’s thesis, however, because nowhere does Everett explicitly state that these other universes actually exist. What he does say on the matter seems to imply the existence of “possible” or potential universes that reflect non-measured or non-actualized states of physical systems, but not that these unrealized outcomes actually exist in some physical universe:

In reply to a preprint of this article some correspondents have raised the question of the “transition from possible to actual,” arguing that in “reality” there is—as our experience testifies—no such splitting of observer states, so that only one branch can ever actually exist. Since this point may occur to other readers the following is offered in explanation.

The whole issue of the transition from “possible” to “actual” is taken care of in the theory in a very simple way—there is no such transition, nor is such a transition necessary for the theory to be in accord with our experience. From the viewpoint of the theory all elements of a superposition (all “branches”) are “actual,” none any more “real” than the rest. It is unnecessary to suppose that all but one are somehow destroyed, since all the separate elements of a superposition individually obey the wave equation with complete indifference to the presence or absence (“actuality” or not) of any other elements. This total lack of effect of one branch on another also implies that no observer will ever be aware of any “splitting” process.

Arguments that the world picture presented by this theory is contradicted by experience, because we are unaware of any branching process, are like the criticism of the Copernican theory that the mobility of the earth as a real physical fact is incompatible with the common sense interpretation of nature because we feel no such motion. In both cases the argument fails when it is shown that the theory itself predicts that our experience will be what it in fact is. (In the Copernican case the addition of Newtonian physics was required to be able to show that the earth’s inhabitants would be unaware of any motion of the earth.)[25]

According to this view, the act of measurement of a quantum system, and its associated principles of uncertainty and entanglement, is simply the reflection of this splitting off of the observable universe from a higher order notion of a multiverse where all possible outcomes and alternate histories have the potential to exist. The radical form of the many-worlds view is that these potential, unmanifest realities do in fact exist, whereas Everett seems to only go so far as to imply that they “could” exist and that conceptually their existence should not be ignored.

As hard as the multiverse interpretation of quantum mechanics might be to wrap your head around, it does represent an elegant solution to some of the challenges raised by the broader physics community against quantum theory, most notably the EPR paradox and its extension to more everyday examples, as illustrated in the infamous Schrödinger’s cat paradox. It does however raise some significant questions as to Everett’s theory of mind and subjective experience, a notion that he glosses over somewhat by abstracting observers into simple machines of sorts, but which nonetheless serves as a primary building block upon which his metatheory rests[26].